GEV responses

In this tutorial, we illustrate how to set up a distributional regression model with a generalized extreme value (GEV) response distribution. First, we simulate some data in R:

The location parameter (\(\mu\)) is a function of an intercept and a non-linear covariate effect.

The scale parameter (\(\sigma\)) is a function of an intercept and a linear effect and uses a log-link.

The shape or concentration parameter (\(\xi\)) is a function of an intercept and a linear effect.
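To make this data-generating process concrete, here is a minimal stdlib-only Python sketch of it, using inverse-CDF sampling. It assumes the standard GEV parameterization \(F(y) = \exp\{-[1 + \xi (y - \mu)/\sigma]^{-1/\xi}\}\) with the Gumbel limit at \(\xi = 0\), which we believe is also the one used by `VGAM::rgev()`. The helpers `gev_quantile`, `gev_cdf` and `simulate` are hypothetical names, and the Python random numbers will not reproduce the R draws.

```python
import math
import random


def gev_quantile(u, loc, scale, shape):
    """Inverse CDF of the GEV distribution for 0 < u < 1."""
    e = -math.log(u)
    if shape == 0.0:
        # Gumbel limit of the GEV quantile function
        return loc - scale * math.log(e)
    return loc + scale * (e ** (-shape) - 1.0) / shape


def gev_cdf(y, loc, scale, shape):
    """CDF of the GEV distribution (standard parameterization)."""
    z = (y - loc) / scale
    if shape == 0.0:
        return math.exp(-math.exp(-z))
    t = 1.0 + shape * z
    if t <= 0.0:
        # outside the support: below the lower bound (shape > 0) or above the upper bound (shape < 0)
        return 0.0 if shape > 0.0 else 1.0
    return math.exp(-t ** (-1.0 / shape))


def simulate(n=1000, seed=1337):
    """Mimic the R simulation: loc, log(scale) and shape are driven by x0, x1, x2."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x0, x1, x2 = rng.random(), rng.random(), rng.random()
        loc = math.sin(2.0 * math.pi * x0)  # non-linear effect of x0
        scale = math.exp(-3.0 + x1)         # linear effect of x1 on the log scale
        shape = 0.1 + x2                    # linear effect of x2
        u = rng.random()
        while u == 0.0:                     # random() may return 0.0, but the quantile needs u > 0
            u = rng.random()
        rows.append((x0, x1, x2, gev_quantile(u, loc, scale, shape)))
    return rows
```

Inverse-CDF sampling is used here only because it needs nothing beyond the standard library. Since \(\xi = 0.1 + x_2 > 0\) for every observation, each simulated \(y\) lies above the lower support bound \(\mu - \sigma/\xi\).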

After simulating the data, we can configure the model with a single call to the `rliesel::liesel()` function.

```r
library(rliesel)
```

```
Please set your Liesel venv, e.g. with use_liesel_venv()
```

```r
library(VGAM)
```

```
Loading required package: stats4
Loading required package: splines
```

set.seed(1337)

n <- 1000

x0 <- runif(n)

x1 <- runif(n)

x2 <- runif(n)

y <- rgev(

n,

location = 0 + sin(2 * pi * x0),

scale = exp(-3 + x1),

shape = 0.1 + x2

)

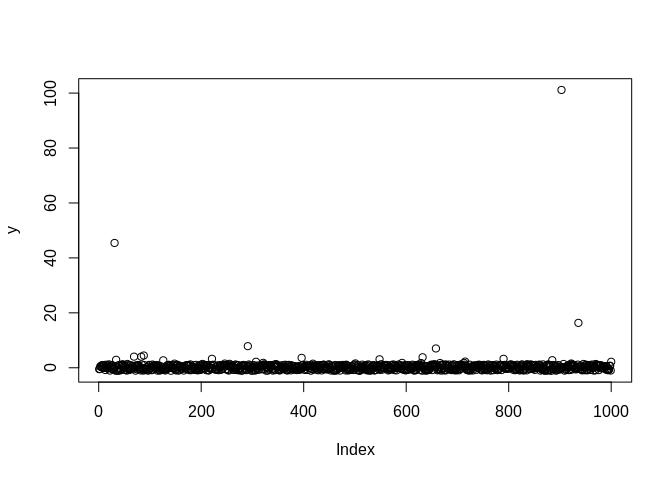

plot(y)

```r
model <- liesel(
  response = y,
  distribution = "GeneralizedExtremeValue",
  predictors = list(
    loc = predictor(~ s(x0)),
    scale = predictor(~ x1, inverse_link = "Exp"),
    concentration = predictor(~ x2)
  )
)
```

Now, we can continue in Python and use the `lsl.dist_reg_mcmc()` function to set up a sampling algorithm with IWLS kernels for the regression coefficients (\(\boldsymbol{\beta}\)) and a Gibbs kernel for the smoothing parameter (\(\tau^2\)) of the spline. Note that we need to set the starting value of the intercept \(\beta_0\) of \(\xi\) to 0.1 manually, because \(\xi = 0\) seems to break the sampler.
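One plausible reason why \(\xi = 0\) needs care: with \(z = (y - \mu)/\sigma\), the GEV log-density \(-\log\sigma - (1 + 1/\xi)\log(1 + \xi z) - (1 + \xi z)^{-1/\xi}\) contains \(1/\xi\), so at \(\xi = 0\) it has to be replaced by its Gumbel limit \(-\log\sigma - z - e^{-z}\). In addition, the density is zero (log-density \(-\infty\)) wherever \(1 + \xi z \le 0\). The stdlib Python sketch below, with a hypothetical `gev_logpdf` helper, handles both special cases explicitly. We have not verified that either case is the actual cause of the failures in Liesel.

```python
import math


def gev_logpdf(y, loc, scale, shape):
    """Log-density of the GEV distribution with the xi = 0 (Gumbel) limit handled explicitly."""
    z = (y - loc) / scale
    if shape == 0.0:
        # Gumbel limit: the general formula would divide by zero here
        return -math.log(scale) - z - math.exp(-z)
    if shape * z <= -1.0:
        # outside the support (1 + xi * z <= 0): the density is zero
        return -math.inf
    log_t = math.log1p(shape * z)  # log(1 + xi * z), accurate when xi * z is tiny
    return -math.log(scale) - (1.0 + 1.0 / shape) * log_t - math.exp(-log_t / shape)


# the general branch approaches the Gumbel limit as xi -> 0
print(gev_logpdf(0.3, 0.0, 1.0, 1e-9), gev_logpdf(0.3, 0.0, 1.0, 0.0))
print(gev_logpdf(-3.0, 0.0, 1.0, 0.5))  # -inf: y lies below the lower bound mu - sigma / xi
```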

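```python
import liesel.model as lsl
import jax.numpy as jnp

# the R object `model` is available via reticulate's `r` object
model = r.model

# start the concentration coefficients at (0.1, 0.0), i.e. xi = 0.1 for all observations;
# concentration == 0.0 seems to break the sampler
model.vars["concentration_p0_beta"].value = jnp.array([0.1, 0.0])

# IWLS kernels for the regression coefficients, Gibbs kernel for the smoothing parameter
builder = lsl.dist_reg_mcmc(model, seed=42, num_chains=4)
builder.set_duration(warmup_duration=1000, posterior_duration=1000)

engine = builder.build()
engine.sample_all_epochs()
```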

```
liesel.goose.engine - INFO - Starting epoch: FAST_ADAPTATION, 75 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 9, 11, 4, 5 / 75 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_01: 0, 0, 1, 0 / 75 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_04: 2, 0, 0, 0 / 75 transitions
liesel.goose.engine - INFO - Finished epoch
liesel.goose.engine - INFO - Starting epoch: SLOW_ADAPTATION, 25 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 1, 1, 2, 4 / 25 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_01: 2, 1, 1, 0 / 25 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_03: 1, 1, 1, 3 / 25 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_04: 1, 2, 2, 1 / 25 transitions
liesel.goose.engine - INFO - Finished epoch
liesel.goose.engine - INFO - Starting epoch: SLOW_ADAPTATION, 50 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 1, 3, 2, 2 / 50 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_01: 0, 1, 1, 2 / 50 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_03: 2, 1, 1, 1 / 50 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_04: 1, 0, 1, 1 / 50 transitions
liesel.goose.engine - INFO - Finished epoch
liesel.goose.engine - INFO - Starting epoch: SLOW_ADAPTATION, 100 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 1, 2, 1, 1 / 100 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_01: 1, 1, 2, 2 / 100 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_03: 1, 0, 1, 2 / 100 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_04: 1, 1, 1, 0 / 100 transitions
liesel.goose.engine - INFO - Finished epoch
liesel.goose.engine - INFO - Starting epoch: SLOW_ADAPTATION, 200 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 1, 2, 1, 1 / 200 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_01: 0, 1, 2, 2 / 200 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_03: 1, 1, 1, 1 / 200 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_04: 3, 1, 2, 1 / 200 transitions
liesel.goose.engine - INFO - Finished epoch
liesel.goose.engine - INFO - Starting epoch: SLOW_ADAPTATION, 500 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 2, 3, 2, 2 / 500 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_01: 1, 2, 1, 2 / 500 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_03: 1, 1, 1, 2 / 500 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_04: 1, 1, 1, 1 / 500 transitions
liesel.goose.engine - INFO - Finished epoch
liesel.goose.engine - INFO - Starting epoch: FAST_ADAPTATION, 50 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 0, 1, 1, 1 / 50 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_01: 0, 1, 1, 0 / 50 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_03: 1, 1, 0, 1 / 50 transitions
liesel.goose.engine - WARNING - Errors per chain for kernel_04: 1, 0, 0, 1 / 50 transitions
liesel.goose.engine - INFO - Finished epoch
liesel.goose.engine - INFO - Finished warmup
liesel.goose.engine - INFO - Starting epoch: POSTERIOR, 1000 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 2, 0, 1, 1 / 1000 transitions
liesel.goose.engine - INFO - Finished epoch
```
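The warmup epoch lengths in the log (75, 25, 50, 100, 200, 500 and 50 transitions, adding up to the requested 1000) look like a Stan-style windowed adaptation scheme: a fast-adaptation buffer, slow-adaptation windows that double in length with the last one stretched to use up the remaining budget, and a final fast-adaptation buffer. The sketch below reproduces these numbers. The buffer sizes 75, 50 and 25 are read off this log, and the stretching rule is our assumption modelled on Stan's scheme, not taken from the Liesel source; `windowed_warmup` is a hypothetical helper.

```python
def windowed_warmup(warmup, init_buffer=75, term_buffer=50, first_window=25):
    """Epoch lengths of a Stan-style warmup: fast buffer, doubling slow windows, fast buffer."""
    slow_total = warmup - init_buffer - term_buffer
    windows = []
    start, size = 0, first_window
    while start < slow_total:
        end = start + size
        # if the next, doubled window would not fit, stretch this window to the end
        if end + 2 * size > slow_total:
            end = slow_total
        windows.append(end - start)
        start, size = end, 2 * size
    return [init_buffer] + windows + [term_buffer]


print(windowed_warmup(1000))  # [75, 25, 50, 100, 200, 500, 50]
```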

Some tabular summary statistics of the posterior samples:

```python
import liesel.goose as gs

results = engine.get_results()
gs.Summary(results)
```

Parameter summary:

| parameter | index | kernel | mean | sd | q_0.05 | q_0.5 | q_0.95 | sample_size | ess_bulk | ess_tail | rhat |
|---|---|---|---|---|---|---|---|---|---|---|---|
| concentration_p0_beta | (0,) | kernel_00 | 0.069 | 0.050 | -0.013 | 0.069 | 0.154 | 4000 | 320.788 | 699.209 | 1.007 |
| concentration_p0_beta | (1,) | kernel_00 | 1.067 | 0.100 | 0.906 | 1.068 | 1.234 | 4000 | 143.899 | 537.567 | 1.018 |
| loc_np0_beta | (0,) | kernel_03 | 0.597 | 0.227 | 0.226 | 0.595 | 0.976 | 4000 | 45.973 | 86.488 | 1.078 |
| loc_np0_beta | (1,) | kernel_03 | 0.325 | 0.122 | 0.120 | 0.325 | 0.523 | 4000 | 97.935 | 221.720 | 1.052 |
| loc_np0_beta | (2,) | kernel_03 | -0.365 | 0.129 | -0.620 | -0.353 | -0.172 | 4000 | 36.305 | 69.750 | 1.095 |
| loc_np0_beta | (3,) | kernel_03 | 0.362 | 0.064 | 0.250 | 0.365 | 0.471 | 4000 | 59.497 | 137.102 | 1.057 |
| loc_np0_beta | (4,) | kernel_03 | -0.244 | 0.086 | -0.385 | -0.247 | -0.102 | 4000 | 45.301 | 149.220 | 1.103 |
| loc_np0_beta | (5,) | kernel_03 | 0.170 | 0.031 | 0.121 | 0.168 | 0.220 | 4000 | 34.185 | 134.045 | 1.118 |
| loc_np0_beta | (6,) | kernel_03 | 6.027 | 0.038 | 5.971 | 6.025 | 6.092 | 4000 | 67.033 | 242.333 | 1.037 |
| loc_np0_beta | (7,) | kernel_03 | 0.546 | 0.071 | 0.431 | 0.548 | 0.660 | 4000 | 33.249 | 176.184 | 1.122 |
| loc_np0_beta | (8,) | kernel_03 | 1.702 | 0.029 | 1.656 | 1.701 | 1.751 | 4000 | 65.259 | 185.559 | 1.038 |
| loc_np0_tau2 | () | kernel_02 | 6.286 | 5.870 | 2.419 | 5.087 | 13.440 | 4000 | 3623.896 | 3754.696 | 1.000 |
| loc_p0_beta | (0,) | kernel_04 | 0.027 | 0.003 | 0.023 | 0.027 | 0.032 | 4000 | 74.594 | 63.512 | 1.060 |
| scale_p0_beta | (0,) | kernel_01 | -3.063 | 0.060 | -3.160 | -3.065 | -2.958 | 4000 | 79.826 | 132.958 | 1.045 |
| scale_p0_beta | (1,) | kernel_01 | 1.040 | 0.077 | 0.910 | 1.042 | 1.166 | 4000 | 133.089 | 255.412 | 1.018 |

Error summary:

| kernel | error_code | error_msg | phase | count | relative |
|---|---|---|---|---|---|
| kernel_00 | 90 | nan acceptance prob | warmup | 67 | 0.017 |
| kernel_00 | 90 | nan acceptance prob | posterior | 4 | 0.001 |
| kernel_01 | 90 | nan acceptance prob | warmup | 28 | 0.007 |
| kernel_01 | 90 | nan acceptance prob | posterior | 0 | 0.000 |
| kernel_03 | 90 | nan acceptance prob | warmup | 27 | 0.007 |
| kernel_03 | 90 | nan acceptance prob | posterior | 0 | 0.000 |
| kernel_04 | 90 | nan acceptance prob | warmup | 27 | 0.007 |
| kernel_04 | 90 | nan acceptance prob | posterior | 0 | 0.000 |
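The error summary aggregates the per-chain warnings from the sampling log. For `kernel_00`, the warmup counts add up to 67, and 67 / (1000 warmup transitions × 4 chains) ≈ 0.017; all rows of the table are consistent with relative = count / (transitions per chain × number of chains). The stdlib Python sketch below recomputes the `kernel_00` rows from the corresponding log lines. The regular expressions and the `error_summary` helper are our own, assume the log format shown in this tutorial, and are not part of `liesel.goose`.

```python
import re
from collections import defaultdict

# epoch headers and kernel_00 warnings, copied from the sampling log of this tutorial
LOG_EXCERPT = """\
liesel.goose.engine - INFO - Starting epoch: FAST_ADAPTATION, 75 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 9, 11, 4, 5 / 75 transitions
liesel.goose.engine - INFO - Starting epoch: SLOW_ADAPTATION, 25 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 1, 1, 2, 4 / 25 transitions
liesel.goose.engine - INFO - Starting epoch: SLOW_ADAPTATION, 50 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 1, 3, 2, 2 / 50 transitions
liesel.goose.engine - INFO - Starting epoch: SLOW_ADAPTATION, 100 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 1, 2, 1, 1 / 100 transitions
liesel.goose.engine - INFO - Starting epoch: SLOW_ADAPTATION, 200 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 1, 2, 1, 1 / 200 transitions
liesel.goose.engine - INFO - Starting epoch: SLOW_ADAPTATION, 500 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 2, 3, 2, 2 / 500 transitions
liesel.goose.engine - INFO - Starting epoch: FAST_ADAPTATION, 50 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 0, 1, 1, 1 / 50 transitions
liesel.goose.engine - INFO - Starting epoch: POSTERIOR, 1000 transitions, 25 jitted together
liesel.goose.engine - WARNING - Errors per chain for kernel_00: 2, 0, 1, 1 / 1000 transitions
"""

EPOCH_RE = re.compile(r"Starting epoch: (\w+), (\d+) transitions")
ERRORS_RE = re.compile(r"Errors per chain for (\w+): ([\d, ]+) / \d+ transitions")


def error_summary(log_text):
    """Map (kernel, phase) to (error count over all chains, relative frequency)."""
    counts = defaultdict(int)       # (kernel, phase) -> errors summed over chains
    transitions = defaultdict(int)  # phase -> transitions per chain
    n_chains = 0
    phase = None
    for line in log_text.splitlines():
        epoch = EPOCH_RE.search(line)
        if epoch:
            phase = "posterior" if epoch.group(1) == "POSTERIOR" else "warmup"
            transitions[phase] += int(epoch.group(2))
            continue
        errors = ERRORS_RE.search(line)
        if errors:
            per_chain = [int(v) for v in errors.group(2).split(",")]
            n_chains = len(per_chain)
            counts[(errors.group(1), phase)] += sum(per_chain)
    return {
        key: (count, count / (transitions[key[1]] * n_chains))
        for key, count in counts.items()
    }


errors_by_kernel = error_summary(LOG_EXCERPT)
print(errors_by_kernel[("kernel_00", "warmup")])     # (67, 0.01675)
print(errors_by_kernel[("kernel_00", "posterior")])  # (4, 0.001)
```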

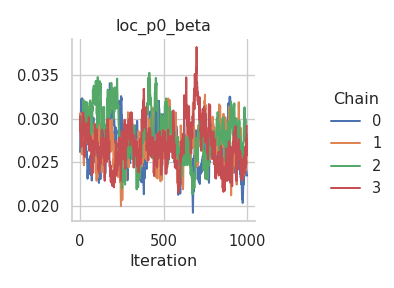

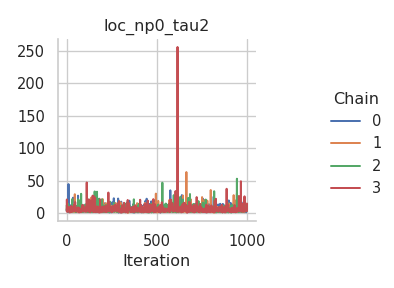

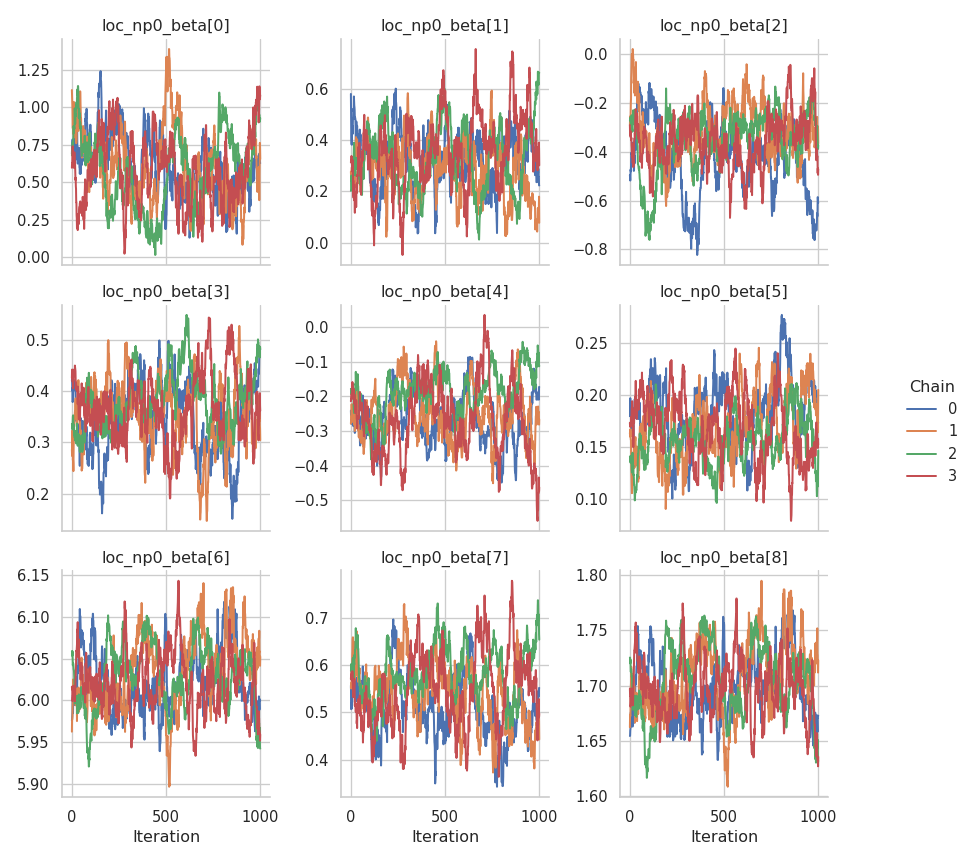

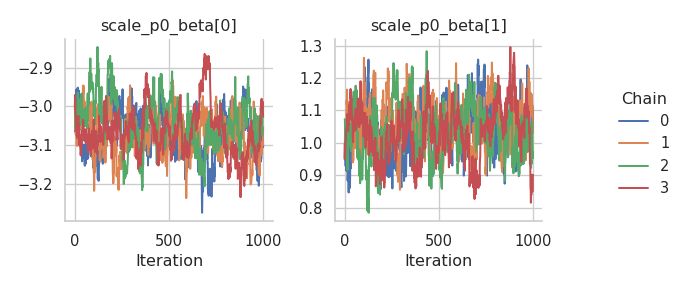

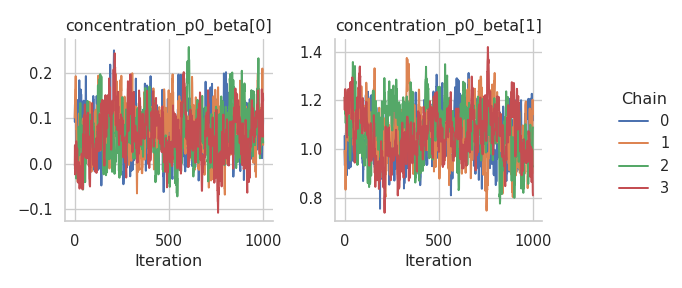

And the corresponding trace plots:

fig = gs.plot_trace(results, "loc_p0_beta")

fig = gs.plot_trace(results, "loc_np0_tau2")

fig = gs.plot_trace(results, "loc_np0_beta")

fig = gs.plot_trace(results, "scale_p0_beta")

fig = gs.plot_trace(results, "concentration_p0_beta")
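Besides inspecting the trace plots, the `rhat` column of the summary quantifies whether the chains agree: it compares the between-chain and within-chain variance, and values close to 1 indicate agreement. The stdlib Python sketch below implements the classic split-\(\hat{R}\) (each chain is split in half before comparing). Liesel's summary may compute a different variant, for example the rank-normalized one, so its numbers need not match this sketch exactly; `split_rhat` is a hypothetical helper.

```python
import random
import statistics


def split_rhat(chains):
    """Classic split-R-hat for a list of equal-length chains of scalar draws."""
    halves = []
    for chain in chains:
        half = len(chain) // 2
        # split each chain into a first and a second half (drops the middle draw if odd)
        halves.append(chain[:half])
        halves.append(chain[len(chain) - half:])
    n = len(halves[0])  # draws per half-chain
    w = statistics.fmean(statistics.variance(h) for h in halves)  # within-chain variance
    b = n * statistics.variance([statistics.fmean(h) for h in halves])  # between-chain variance
    var_plus = (n - 1) / n * w + b / n  # pooled estimate of the posterior variance
    return (var_plus / w) ** 0.5


rng = random.Random(0)
mixed = [[rng.gauss(0.0, 1.0) for _ in range(1000)] for _ in range(4)]
stuck = [[rng.gauss(mu, 1.0) for _ in range(1000)] for mu in (0.0, 0.0, 5.0, 5.0)]
print(split_rhat(mixed), split_rhat(stuck))  # close to 1 vs. clearly above 1
```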

We need to reset the index of the summary data frame before we can transfer it to R.

```python
summary = gs.Summary(results).to_dataframe().reset_index()
```

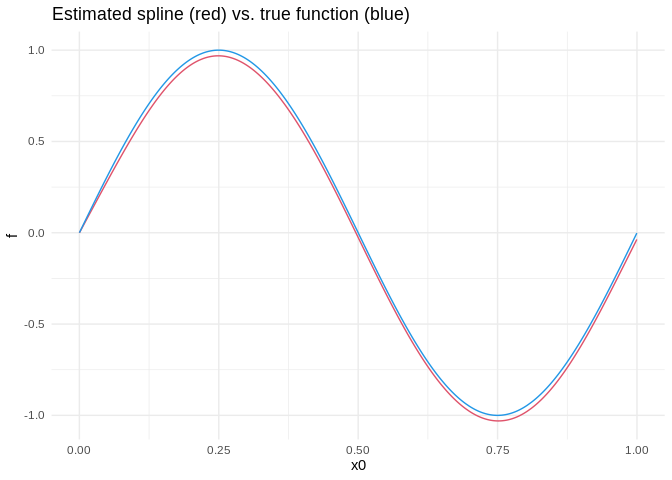

After transferring the summary data frame to R, we can process it with packages like dplyr and ggplot2. Here is a visualization of the estimated spline vs. the true function:

library(dplyr)

Attaching package: 'dplyr'

The following objects are masked from 'package:stats':

filter, lag

The following objects are masked from 'package:base':

intersect, setdiff, setequal, union

library(ggplot2)

library(reticulate)

summary <- py$summary

beta <- summary %>%

filter(variable == "loc_np0_beta") %>%

group_by(var_index) %>%

summarize(mean = mean(mean)) %>%

ungroup()

beta <- beta$mean

X <- py_to_r(model$vars["loc_np0_X"]$value)

estimate <- X %*% beta

true <- sin(2 * pi * x0)

ggplot(data.frame(x0 = x0, estimate = estimate, true = true)) +

geom_line(aes(x0, estimate), color = palette()[2]) +

geom_line(aes(x0, true), color = palette()[4]) +

ggtitle("Estimated spline (red) vs. true function (blue)") +

ylab("f") +

theme_minimal()
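The spline estimate above is a linear combination `X %*% beta`: each column of the design matrix `loc_np0_X` is one basis function evaluated at `x0`, and the posterior mean coefficients weight these columns. The stdlib Python sketch below illustrates this with a cubic B-spline basis built by the Cox-de Boor recursion. This basis is a stand-in chosen for illustration. Assuming rliesel builds the basis with mgcv, as the `s()` syntax suggests, `loc_np0_X` would by default hold a thin plate regression spline basis with an identifiability constraint, so its columns differ from these B-splines. `bspline`, `bspline_design` and `matvec` are hypothetical helpers.

```python
def bspline(x, knots, degree, i):
    """Value of the i-th B-spline basis function of the given degree at x (Cox-de Boor recursion)."""
    if degree == 0:
        return 1.0 if knots[i] <= x < knots[i + 1] else 0.0
    value = 0.0
    left = knots[i + degree] - knots[i]
    if left > 0.0:
        value += (x - knots[i]) / left * bspline(x, knots, degree - 1, i)
    right = knots[i + degree + 1] - knots[i + 1]
    if right > 0.0:
        value += (knots[i + degree + 1] - x) / right * bspline(x, knots, degree - 1, i + 1)
    return value


def bspline_design(xs, n_segments=6, degree=3):
    """Design matrix with one row per x in [0, 1] and one column per basis function."""
    # equally spaced knots, extended beyond [0, 1] on both sides
    knots = [(j - degree) / n_segments for j in range(n_segments + 2 * degree + 1)]
    n_basis = len(knots) - degree - 1
    return [[bspline(x, knots, degree, i) for i in range(n_basis)] for x in xs]


def matvec(X, beta):
    """Plain-Python equivalent of R's X %*% beta for a matrix X and a vector beta."""
    return [sum(x_ij * b_j for x_ij, b_j in zip(row, beta)) for row in X]


X = bspline_design([0.0, 0.25, 0.5, 0.75, 1.0])
print(len(X[0]))  # 9 basis functions for 6 segments and degree 3
```

With `beta` set to a vector of coefficients, `matvec(X, beta)` plays the role of `estimate` in the R code. Because B-splines sum to one on \([0, 1]\), a constant coefficient vector yields a constant function, which is one reason why spline bases are usually combined with an identifiability constraint next to a separate intercept such as `loc_p0_beta`.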